WP Robots Txt

- Dubai Seo Expert

- 0

- Posted on

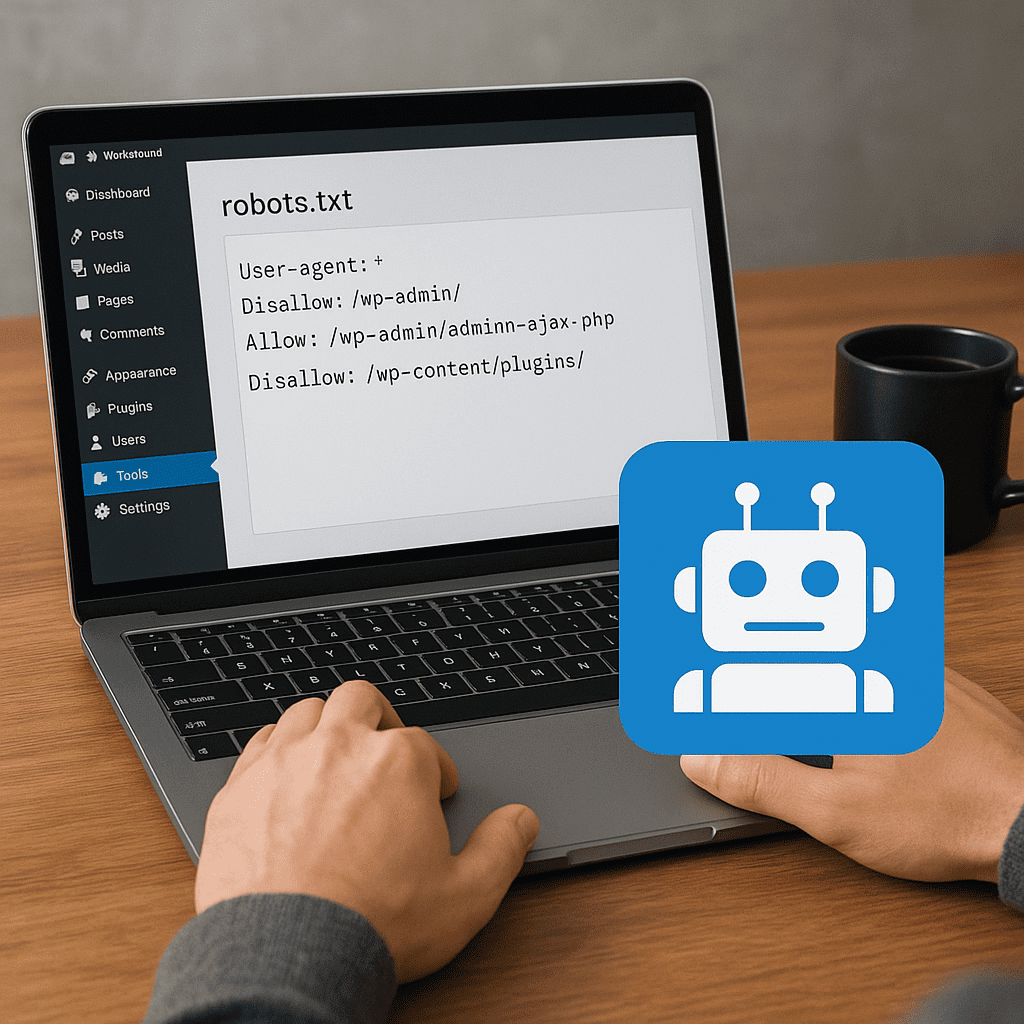

WordPress sites live and die by how well they communicate with search bots, and one of the most overlooked levers is the humble robots.txt file. WP Robots Txt is a plugin designed to put that lever within easy reach of non-technical site owners and developers alike, offering a clean interface to create and manage a robots.txt that fits your site’s goals without risky edits over FTP. Understanding what the plugin does, what robots.txt can and cannot do, and how it affects visibility is the key to using it responsibly and profitably.

What WP Robots Txt actually is

WP Robots Txt is a WordPress plugin that exposes a settings screen where you can edit the rules delivered at yoursite.com/robots.txt. Depending on your hosting and file permissions, it can either write a physical file in your web root or serve a virtual version through WordPress. The big advantage is versioned, auditable control from the dashboard, often with convenient features like environment presets, per-bot sections, and one-click insertion of common WordPress-safe directives.

Because crawlers fetch robots.txt before crawling a site and refresh it regularly (Google generally caches it for up to 24 hours), it’s the sign on the front door for automated agents that want to explore your site. Getting this file right prevents accidental blocking of important pages and reduces wasteful bot activity. Through its Sitemap lines, it also points crawlers to your most important content. The plugin’s point is not to “do SEO for you,” but to make it simple to express crawl policy in a way that aligns with your content and infrastructure.

How robots.txt works in WordPress

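At its core, robots.txt is a plain-text set of rules that tell bots which paths they may or may not request. Rules are organized into groups: each group starts with one or more User-agent lines, followed by the Allow and Disallow lines that apply to those crawlers. A crawler obeys the group that names it and falls back to the User-agent: * group only if no group names it. Within a group, major crawlers such as Googlebot do not decide by rule order. The most specific (longest) matching path wins, and Allow wins a tie. In WordPress, a default virtual robots.txt is served if no physical file exists, typically allowing almost everything except sensitive administrative paths.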

These are the essential concepts to keep in mind:

- The file must live at the root of your host (example.com/robots.txt) to be respected by well-behaved bots.

- Rules operate on URL paths, not the underlying filesystem.

- Wildcards (*) and end-of-URL anchors ($) were not part of the original convention, but major crawlers support them and RFC 9309 now standardizes them.

- The file is advisory, not an access-control mechanism: bad actors can ignore it. Never treat it as security.

- Blocking a URL in robots.txt doesn’t guarantee it won’t appear in search; if a blocked URL is linked elsewhere, it can still be indexed without a snippet. To stop indexing, use noindex methods on the page or header-level directives.
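Rule matching is easier to reason about in code. Below is a minimal Python sketch of the longest-match evaluation that Google documents and RFC 9309 standardizes for a single group. The matching rule with the longest path wins, and Allow wins a tie. `*` matches any run of characters, and a trailing `$` anchors the end of the URL.

This is an illustration under simplifying assumptions, not a production parser. It skips percent-encoding normalization and user-agent selection, and the function names and sample rules are hypothetical.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters and a trailing '$' anchors the end of
    the URL; everything else is literal. re.match() anchors at the start, so
    an unanchored pattern behaves as a prefix match.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Longest-match decision for one group of (directive, pattern) rules.

    `path` includes any query string, e.g. "/post/?replytocom=5".
    Percent-encoding normalization is deliberately skipped in this sketch.
    """
    best_len = -1
    allowed = True  # no matching rule means the path may be crawled
    for directive, pattern in rules:
        if not pattern:  # an empty Disallow value blocks nothing
            continue
        if pattern_to_regex(pattern).match(path):
            is_allow = directive.lower() == "allow"
            # A longer pattern is more specific; on a tie, Allow wins.
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len = len(pattern)
                allowed = is_allow
    return allowed


# Hypothetical group mirroring common WordPress rules.
RULES = [
    ("disallow", "/wp-admin/"),
    ("allow", "/wp-admin/admin-ajax.php"),
    ("disallow", "/*?replytocom="),
]

for path in ("/wp-admin/options.php", "/wp-admin/admin-ajax.php",
             "/hello-world/?replytocom=42", "/hello-world/"):
    print(path, "allowed" if is_allowed(RULES, path) else "blocked")
```

Because the decision ignores rule order, moving the Allow line above or below the Disallow line gives the same result. Python’s standard urllib.robotparser, by contrast, returns the first matching rule in file order, so it can disagree with Google on a file like this one.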

A quick vocabulary worth mastering:

- Crawling is what robots.txt governs. It affects discovery but not ranking directly.
- Indexing is search engines storing content so it can be retrieved for results.
- A sitemap helps bots find your canonical URLs efficiently.
- A User-agent line directs a group of rules to a specific crawler.
- Allow and Disallow lines carve out path access.
- Canonical signals should agree with your crawl policy.

All of it serves the larger goal of SEO.

What the WP Robots Txt plugin brings to the table

While you can create a robots.txt file by hand, the plugin adds convenience and guardrails:

- Dashboard editing: manage rules from Settings without FTP or shell access.

- Virtual or physical file: generate a dynamic robots.txt through WordPress or write a file if your host allows it.

- Prebuilt templates: insert WordPress-safe defaults (e.g., block administrative paths while allowing critical AJAX endpoints).

- Per-bot sections: tailor rules for Googlebot, Bingbot, AdsBot, image or video crawlers.

- Multisite support: propagate network-wide rules with site-level overrides.

- Conditional rules: maintain different policies for staging and production (e.g., block all crawling on staging).

- Automatic sitemap detection: surface the Sitemap line(s) pointing to your XML sitemaps.

- Change history: log edits for accountability and rollback.

- Import/export: move policies between projects or store in version control.

- Compatibility helpers: interoperate with popular SEO suites that also manage robots.txt to prevent conflicts.

These features vary by version and vendor; always review the plugin’s readme and changelog to match your requirements. The broad idea remains: give you enough power to express policy clearly, and enough structure to avoid common mistakes.

Default WordPress-friendly directives you should know

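Most sites benefit from a predictable baseline. Current WordPress core already serves a virtual robots.txt that blocks /wp-admin/ while allowing /wp-admin/admin-ajax.php. Since WordPress 5.5, it also lists the core XML sitemap (wp-sitemap.xml). Mature sites tend to make the baseline explicit and point to the sitemap they actually use, like this: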

- User-agent: *

- Disallow: /wp-admin/

- Allow: /wp-admin/admin-ajax.php

- Sitemap: https://example.com/sitemap_index.xml

This baseline blocks crawl access to administrative screens (which are useless to bots) while allowing the AJAX endpoint many themes and plugins rely on. What not to do: block /wp-content/ or /wp-includes/ outright; modern search crawlers fetch CSS and JS to render pages properly, and blocking these folders can harm understanding and coverage.
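The warning against blocking /wp-content/ or /wp-includes/ is easy to automate. This hypothetical Python helper scans robots.txt text and reports Disallow values that would cover an entire asset directory. It deliberately ignores groups, wildcards, and Allow overrides, so treat a hit as a prompt to review rather than proof of a problem.

```python
ASSET_DIRS = ("/wp-content/", "/wp-includes/")


def blocked_asset_dirs(robots_txt: str) -> list[str]:
    """Return Disallow values that would block a whole WordPress asset directory.

    A value that is a prefix of /wp-content/ or /wp-includes/ (including "/" or
    "/wp-content" without the trailing slash) covers everything beneath it.
    Specific subpaths such as /wp-content/uploads/private/ are not flagged.
    Groups, wildcard expansion, and Allow overrides are ignored in this check.
    """
    findings = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        field, sep, value = line.partition(":")
        if not sep or field.strip().lower() != "disallow":
            continue
        value = value.strip()
        if value and any(d.startswith(value) for d in ASSET_DIRS):
            findings.append(value)
    return findings


sample = """User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
Allow: /wp-admin/admin-ajax.php
"""
print(blocked_asset_dirs(sample))
```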

Realistic configuration scenarios

1) A content-driven blog on a single domain

Goal: ensure efficient crawl coverage and avoid wasting crawl budget on internal search results and query-parameter duplicates.

- User-agent: *

- Disallow: /wp-admin/

- Allow: /wp-admin/admin-ajax.php

- Disallow: /?s=

- Disallow: /*?replytocom=

- Sitemap: https://example.com/sitemap_index.xml

Explanation: internal search URLs (/?s=) and replytocom parameter duplicates add no value; let canonical pages represent the content. Keep category and tag archives indexable only if they’re well curated and valuable. Otherwise, add a noindex meta tag rather than blocking them in robots.txt, because crawlers must fetch a page to see its noindex directive.

2) An eCommerce store

Goal: keep crawlers out of checkout flows, filter combinations, and customer account areas while keeping product and category content fast to discover.

- User-agent: *

- Disallow: /cart/

- Disallow: /checkout/

- Disallow: /my-account/

- Disallow: /*?add-to-cart=

- Disallow: /*?orderby=

- Disallow: /*&orderby=

- Disallow: /wp-admin/

- Allow: /wp-admin/admin-ajax.php

- Sitemap: https://example.com/sitemap_index.xml

Explanation: transactional paths and sort/filter parameters can multiply URL counts dramatically. Pair these rules with facet management: canonicalize filtered variants you don’t want indexed to the base listing so signals concentrate there.
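Why list both `?orderby=` and `&orderby=`? A pattern only matches the parameter in the position it names. `/*?orderby=` catches it as the first query parameter, and `/*&orderby=` catches it after another one. This Python sketch uses a simplified wildcard matcher (prefix match, `*` only) and hypothetical shop URLs to show the difference.

```python
import re


def matches(pattern: str, path: str) -> bool:
    """Simplified robots.txt match: prefix match where '*' means any characters."""
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.match(regex, path) is not None


PATTERNS = ("/*?orderby=", "/*&orderby=")

# Hypothetical shop URLs.
for url in ("/shop/?orderby=price",                  # orderby is the first parameter
            "/shop/?filter_color=red&orderby=price",  # orderby follows another parameter
            "/shop/shoes/"):                         # clean category URL
    hits = [p for p in PATTERNS if matches(p, url)]
    print(url, "->", hits or "not blocked")
```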

3) A multilingual site with subdirectories

Goal: make sure crawlers find language sitemaps and don’t waste time on translator previews or duplicate directories.

- User-agent: *

- Disallow: /wp-admin/

- Allow: /wp-admin/admin-ajax.php

- Sitemap: https://example.com/sitemap_index.xml

- Sitemap: https://example.com/en/sitemap_index.xml

- Sitemap: https://example.com/de/sitemap_index.xml

Explanation: robots.txt accepts multiple Sitemap lines, one per sitemap location. Ensure your hreflang annotations and canonical tags are consistent with the URL structure.
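If you maintain several sitemaps, generating the lines from a list keeps them consistent. This is a minimal Python sketch, assuming your sitemap URLs are known up front; the helper name is hypothetical. It rejects relative URLs because Sitemap lines need absolute URLs.

```python
from urllib.parse import urlparse


def sitemap_lines(urls: list[str]) -> str:
    """Render one 'Sitemap:' line per URL, rejecting anything not absolute."""
    lines = []
    for url in urls:
        parts = urlparse(url)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            raise ValueError(f"Sitemap URL must be absolute: {url!r}")
        lines.append(f"Sitemap: {url}")
    return "\n".join(lines)


# Sitemap locations from the multilingual example.
print(sitemap_lines([
    "https://example.com/sitemap_index.xml",
    "https://example.com/en/sitemap_index.xml",
    "https://example.com/de/sitemap_index.xml",
]))
```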

4) Staging and development environments

Goal: prevent test sites from being crawled or indexed.

- User-agent: *

- Disallow: /

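Important: blocking crawling alone does not prevent indexing if URLs leak. The strongest option is HTTP authentication (or an IP allowlist) on the whole site, which keeps both bots and the public out. If you instead send a noindex X-Robots-Tag header on all responses, crawlers only see it on URLs they are allowed to fetch, so don’t put Disallow: / in front of it. The plugin can help set Disallow, but server protection is the gold standard for staging.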
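In practice you would enable HTTP authentication in your web server, host control panel, or CDN rather than in application code. To show the behavior, here is a minimal Python WSGI middleware sketch that answers any request lacking valid Basic credentials with a 401. The function name and credentials are hypothetical. Only use Basic auth over HTTPS.

```python
import base64
import hmac


def basic_auth_middleware(app, username: str, password: str, realm: str = "staging"):
    """Wrap a WSGI app so every request must carry valid HTTP Basic credentials."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    expected = f"Basic {token}".encode()

    def wrapped(environ, start_response):
        supplied = environ.get("HTTP_AUTHORIZATION", "").encode()
        # Constant-time comparison reduces timing side channels.
        if hmac.compare_digest(supplied, expected):
            return app(environ, start_response)
        start_response("401 Unauthorized", [
            ("WWW-Authenticate", f'Basic realm="{realm}"'),
            ("Content-Type", "text/plain"),
        ])
        return [b"Authentication required"]

    return wrapped
```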

Does the plugin improve SEO?

Indirectly. The robots.txt file doesn’t boost rankings by itself. What it does is optimize the crawl: it funnels bots toward pages that matter and away from noise. If a site has thousands of low-value parameter URLs that consume crawler attention, a careful robots.txt can preserve crawl budget for new or updated content. That can speed up discovery and, in practice, help competitive pages enter the index faster.

But beware the flip side: an overzealous policy can starve crawlers of resources needed to render pages, or block access to pages that carry critical signals. If your rankings drop after a robots.txt change, check whether you’ve blocked resources like theme CSS, JavaScript, or critical images. Also remember that if you Disallow a URL, you can’t rely on on-page noindex to keep it out of search, because crawlers will not fetch the HTML to see that directive.

Best practices with WP Robots Txt

- Start with a small, deliberate policy: block only what you are confident is safe to block.

- Always allow resources required for rendering (CSS/JS in themes and plugins).

- Reference your XML sitemap(s) explicitly to help discovery.

- Use canonical tags within the site to guide consolidation; robots.txt is not a substitute for canonicalization.

- Prefer on-page or header-level noindex to remove URLs from search results.

- For staging, enforce HTTP auth or IP allowlists. Use Disallow: / as a secondary layer, not the primary shield.

- Document and date each change in the plugin; annotate why a rule exists so teammates understand its purpose.

- Test after changes with multiple crawlers and tools to confirm the policy behaves as intended.

Common mistakes the plugin helps you avoid

- Blocking the entire site on production by leaving a staging policy in place. Solution: use environment markers and double confirmation for Disallow: /.

- Disallowing /wp-content/ or /wp-includes/ globally. Solution: allow necessary assets; block only specific wasteful paths.

- Relying on robots.txt to hide confidential content. Solution: move secrets out of web root, gate behind authentication.

- Assuming Crawl-delay works everywhere. Google ignores this directive. Solution: tune server resources and rate limits instead.

- Conflicting directives across plugins. Solution: choose a single source of truth for robots.txt management to avoid duplicates.
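A cheap guard against the first mistake is a deployment check that fails when production’s robots.txt blocks everything. This Python sketch uses a hypothetical helper with simplified group parsing: consecutive User-agent lines count as one group. It reports whether the group for a given agent contains a bare `Disallow: /`.

```python
def blocks_everything(robots_txt: str, agent: str = "*") -> bool:
    """Return True if the group for `agent` contains a bare 'Disallow: /'.

    Simplified parsing: consecutive User-agent lines form one group, and the
    group ends at the next User-agent line that follows a rule line.
    Comments and blank lines are ignored; Allow overrides are not evaluated.
    """
    agents: set[str] = set()
    reading_agents = False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()
        field, sep, value = line.partition(":")
        if not sep:
            continue
        field, value = field.strip().lower(), value.strip()
        if field == "user-agent":
            if not reading_agents:
                agents = set()  # a new group begins
                reading_agents = True
            agents.add(value.lower())
        else:
            reading_agents = False
            if field == "disallow" and value == "/" and agent.lower() in agents:
                return True
    return False


# Usage sketch in a deploy script (production_robots_txt is hypothetical):
# if blocks_everything(production_robots_txt):
#     raise SystemExit("Refusing to deploy: robots.txt blocks all crawlers")
```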

Advanced techniques with WP Robots Txt

Per-bot tuning

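Sometimes you want Googlebot to have full run of the site but restrict or slow down other agents. With per-bot sections you can give a specific crawler, such as Bingbot or an image crawler, its own rules. A crawler that finds a group naming it ignores the User-agent: * group entirely, so repeat any baseline rules it still needs inside its own group. Google’s AdsBot crawlers are a special case: they ignore User-agent: * and follow only groups that name them. Major crawlers do not care about group order, but keeping User-agent: * at the top and more specific agents below it makes the file easier to read.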
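Group selection is the part that most often surprises people. This simplified Python sketch uses a hypothetical rule set to show the fallback logic: a crawler uses the group that names it and falls back to `*` only when no group does. It does not model partial token matching, combining duplicate groups, or the AdsBot exception.

```python
# Hypothetical rule set: a baseline for everyone plus a special case for Bingbot.
GROUPS: dict[str, list[tuple[str, str]]] = {
    "*": [("disallow", "/wp-admin/"), ("disallow", "/*?s=")],
    "bingbot": [("disallow", "/wp-admin/")],
}


def select_group(groups: dict[str, list[tuple[str, str]]], crawler: str) -> list[tuple[str, str]]:
    """Return the rules a crawler obeys under simplified group selection.

    Keys are lowercase user-agent tokens or "*". A crawler named by a group uses
    only that group and ignores "*"; otherwise it falls back to "*" (or to no
    rules at all if there is no "*" group).
    """
    token = crawler.lower()
    if token in groups:
        return groups[token]
    return groups.get("*", [])


print(select_group(GROUPS, "Bingbot"))    # only the /wp-admin/ rule
print(select_group(GROUPS, "Googlebot"))  # falls back to the "*" group
```

Here Bingbot no longer inherits the `/*?s=` rule from the `*` group. That is why special-case groups should repeat the baseline rules they still need.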

Handling parameters

Robots.txt can prune common parameter URLs that balloon crawl waste. Focus on parameters that never produce unique content: sort, session, tracking, pagination duplicates. Keep in mind that some parameters might be essential to user experience or analytics; block carefully. For complex parameter handling, consider URL parameter tools in Bing Webmaster Tools and robust canonical linking on your pages.

One site, many sitemaps

It’s common for a site to have multiple sitemap files: a sitemap index, component sitemaps for posts, pages, products, and sitemaps generated by multilingual plugins. Listing each in robots.txt is fine. The plugin can auto-detect locations or let you add multiple lines manually.

Complementing with X-Robots-Tag

To prevent indexing of file types like PDFs or images or to deindex a path pattern, inject response headers using your server or a headers plugin. Robots.txt cannot remove already indexed URLs; a header or meta robots noindex is required for deindexing. Consider this a layered policy: robots.txt prunes crawl paths, headers govern index status, and canonicals consolidate signals.
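Header rules normally live in your web server or CDN configuration. To illustrate the logic, this Python WSGI middleware sketch adds `X-Robots-Tag: noindex` to responses for paths ending in `.pdf`; the function name is hypothetical. The PDFs must stay crawlable (not disallowed in robots.txt) for crawlers to see the header.

```python
def noindex_pdfs(app):
    """Wrap a WSGI app so responses for *.pdf paths carry X-Robots-Tag: noindex."""

    def wrapped(environ, start_response):
        path = environ.get("PATH_INFO", "")
        if not path.lower().endswith(".pdf"):
            return app(environ, start_response)

        def start_with_noindex(status, headers, exc_info=None):
            # Replace any existing X-Robots-Tag so the header is not duplicated.
            headers = [(k, v) for k, v in headers if k.lower() != "x-robots-tag"]
            headers.append(("X-Robots-Tag", "noindex"))
            return start_response(status, headers, exc_info)

        return app(environ, start_with_noindex)

    return wrapped
```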

Compatibility with Yoast SEO, Rank Math, and others

Popular SEO plugins often include a robots.txt editor. Running multiple editors can cause conflicts or unexpected overwrites. Decide which plugin will own robots.txt and disable the others’ editors. WP Robots Txt typically coexists peacefully if you follow one-owner discipline and review the final file after changes from any plugin that might regenerate it (for example, after a major SEO plugin update or migration).

If you use a security or hardening plugin that writes to the filesystem, confirm that it doesn’t block writing a physical robots.txt. If your host uses a read-only web root, use the virtual mode provided by WP Robots Txt.

Performance and security considerations

Robots.txt is a tiny file and practically free to serve, but a virtual robots.txt still routes through WordPress. With good page caching or an edge CDN, the request can be served from cache without invoking PHP. If your site is high-traffic and bot-heavy, cache robots.txt at the edge for efficiency. On security, remember the file is public: do not list sensitive directories hoping bots won’t look; treat it as a polite suggestion only.

Testing and monitoring your configuration

- Fetch the file directly in your browser to ensure it returns HTTP 200 and the expected content.

- Use the URL Inspection tool in Google Search Console to check whether a specific URL is crawlable or blocked by robots.txt.

- Use Bing Webmaster Tools’ robots tester for quick, per-line evaluation.

- Monitor server logs or analytics for bot behavior changes after an edit.

- Track the Page indexing report in Search Console to see whether the “Discovered – currently not indexed” and “Crawled – currently not indexed” counts improve after you prune parameter waste.

- Set reminders to review the policy after theme/plugin updates that change asset paths.
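For the log-monitoring step, a few lines of Python can summarize bot activity from an access log. This sketch assumes the common Combined Log Format and a hypothetical short list of bot tokens. It splits hits into clean and parameter URLs so you can see whether pruning reduced parameter crawling. User-agent strings can be spoofed, so verify important bots (for example with reverse DNS) before acting on the numbers.

```python
import re
from collections import Counter

# Combined Log Format: ... "METHOD PATH PROTOCOL" STATUS SIZE "REFERER" "USER-AGENT"
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)
# Hypothetical watch list; extend with the crawlers you care about.
BOT_TOKENS = ("Googlebot", "bingbot", "YandexBot")


def bot_hits(lines):
    """Count (bot, 'clean' | 'parameter') request pairs from log lines."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines in other formats
        agent = match.group("agent").lower()
        bot = next((t for t in BOT_TOKENS if t.lower() in agent), None)
        if bot is None:
            continue  # not a crawler on the watch list
        kind = "parameter" if "?" in match.group("path") else "clean"
        counts[(bot, kind)] += 1
    return counts


# Usage sketch (the log path is hypothetical):
# with open("/var/log/nginx/access.log", encoding="utf-8", errors="replace") as f:
#     for (bot, kind), n in bot_hits(f).most_common():
#         print(bot, kind, n)
```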

Alternatives to WP Robots Txt

- Manual file editing via FTP or your host’s file manager, for full control if you’re comfortable with deployment workflows.

- Robots editors bundled in SEO suites (Yoast SEO, Rank Math, All in One SEO) if you prefer an all-in-one tool.

- Server-level config for X-Robots-Tag headers and redirects, which complement but do not replace robots.txt.

The choice depends on your team, hosting, and processes. WP Robots Txt focuses on doing one job well: managing robots.txt from the WordPress dashboard with clarity.

Opinion: when WP Robots Txt shines and when to skip it

If your workflow benefits from a single, predictable place to manage crawl policy—and you want non-developers to handle routine edits—WP Robots Txt is a low-friction win. The interface reduces the risk of typos, keeps a history of changes, and offers default templates that keep you from blocking critical assets. For agencies and multisite networks, the ability to propagate a baseline and tweak per site is practical and time-saving.

On the other hand, if your deployment process already tracks a physical robots.txt in git and you prefer file-based config with CI/CD gates, a plugin may not add much. Similarly, if you rely on a comprehensive SEO suite that already manages robots.txt and you’re disciplined about conflicts, consolidating under one plugin can be simpler than adding another widget to your stack.

Verdict: the plugin is aptly focused, safe when used with care, and genuinely helpful for many WordPress owners. Its impact on organic performance comes from enabling better policy—not from magic. Used alongside solid technical hygiene, it’s a sensible part of an optimization toolkit.

Frequently asked questions

Can robots.txt block specific file types like PDFs?

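Yes, by path or extension; crawlers that support wildcards understand a rule like Disallow: /*.pdf$. However, this only stops crawling, and a blocked PDF can still be indexed via external links. To prevent indexing, add X-Robots-Tag: noindex to those file responses at the server level and leave them crawlable so the header can be seen.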

Should I add Crawl-delay?

Google ignores it; Bing and Yandex may honor it. If you’re experiencing load issues due to bots, manage via server rate-limiting, CDN rules, or bot verification rather than relying solely on Crawl-delay.
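Server-side rate limiting is usually configured in a CDN or web server, but the underlying idea is simple. A token bucket is one common technique: each client earns tokens at a fixed rate up to a burst limit, and each request spends one. Below is a minimal Python sketch that takes the time as an explicit argument so it is easy to test; the class name and parameters are illustrative.

```python
class TokenBucket:
    """Allow roughly `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity   # start full so an initial burst is allowed
        self.updated = None      # time of the previous call, in seconds

    def allow(self, now: float) -> bool:
        """Spend one token if available; `now` is a timestamp such as time.monotonic()."""
        if self.updated is not None:
            elapsed = max(0.0, now - self.updated)
            self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

In a real deployment you would keep one bucket per client key, such as an IP address or verified bot name, and return HTTP 429 when allow() is False.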

How many Sitemap lines can I have?

As many as needed. Point to a sitemap index to simplify. Ensure the URLs are absolute and accessible.

Is it okay to block tag or category archives?

If they add little value, consider adding noindex on-page and leaving them crawlable so engines see the directive. Blocking in robots.txt prevents the crawler from reading the noindex tag.

What about images and CSS/JS?

Do not block resources needed to render and understand pages. Modern rendering relies on CSS/JS, so blocking them can harm coverage and interpretation.

A practical workflow to adopt

- Define site goals: which sections are index-worthy pillars, which are utility, which are sensitive.

- Draft a minimal policy in WP Robots Txt, starting from a known-safe template.

- List your sitemaps explicitly.

- Review assets: confirm CSS/JS and images integral to rendering are accessible.

- Deploy to staging first; test with URL-specific checks and bot simulations.

- Roll out to production; monitor logs and coverage for two weeks.

- Iterate: adjust parameter rules, refine per-bot exceptions, and document changes.

Conclusion

Robots.txt is a small file with outsized influence on how bots expend effort on your site. The WP Robots Txt plugin turns a historically fiddly, error-prone task into a manageable, documented process that fits neatly into WordPress. When combined with sane defaults, careful allowances for rendering resources, explicit sitemaps, and complementary signals like canonical and noindex, it becomes an effective control surface for crawl efficiency. Use it to keep bots focused on what matters, protect transactional flows, keep staging out of sight, and preserve the agility to adapt as your content and infrastructure evolve.